TL;DR Rachio should have included an extra antenna in their v2 sprinkler controller.

Context

Utah, like most of the western U.S., is experiencing a heat wave. Normally, September brings temperatures in the 75°F-85°F range. Instead, Salt Lake City saw a record-tying 107°F this past week. I keep tall fescue grass and mini clover in both my front and back yards and water them with a Rachio 2 8-zone sprinkler timer. It’s been a great timer, both for the mobile app that lets me configure the controller from anywhere and for water-saving features like seasonal shift and individual zone programming.

However, the recent heat wave has been raising the temperature in my garage, where the Rachio 2 is mounted on the wall. Even though there is an eero Wi-Fi access point only 30 feet away, the added heat knocks the Rachio 2 off my Wi-Fi around 5pm, and it only rejoins around 2am when the temperature goes down. I contacted Rachio support about this, but they insisted the issue is my Wi-Fi network. The controller is about 3 years old, and in that time I’ve never had any issue with it staying connected to my network. I’ve also not made any recent changes to the network’s hardware, firmware, or settings. It’s also probable that the controller itself is just getting old and needs to be replaced.

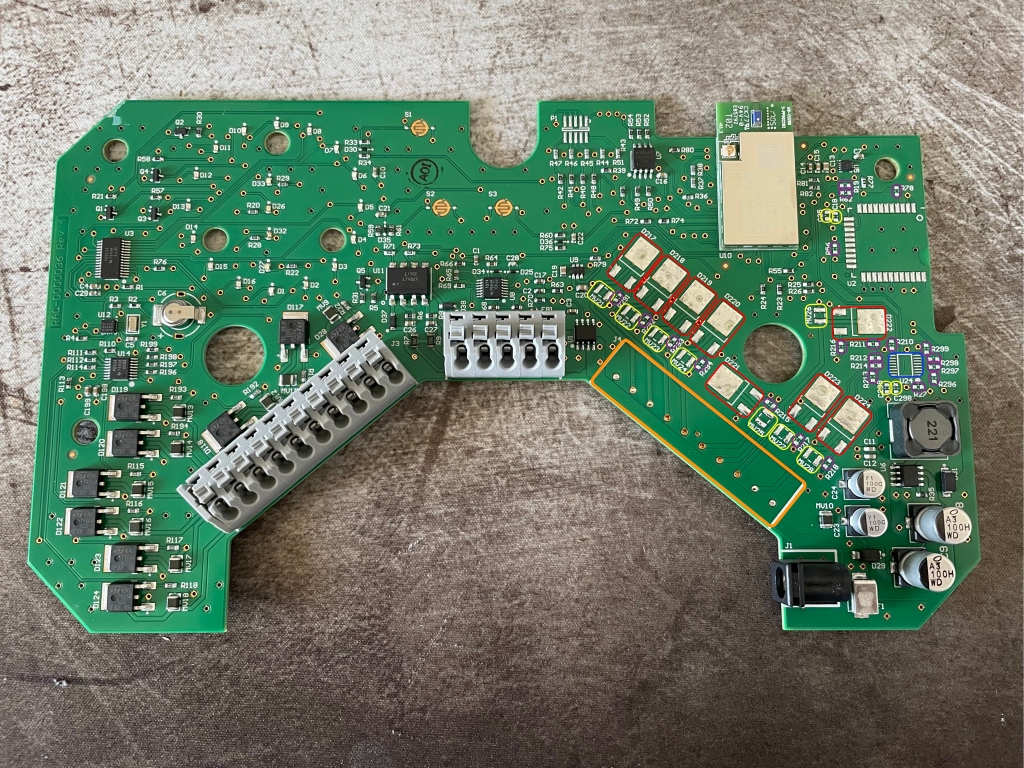

Since I’m a tinkerer though, I decided to take the controller off the wall and have a look on the inside. “Maybe I can rig a better heatsink inside,” I thought. Once I got the controller apart, I found some interesting things.

The Extra Space

Even though this is the board for the Rachio 2 8-zone controller, it appears to be the same board that would be used for the 16-zone controller, minus a few parts. The surface mount pads for those omitted parts are even tinned with flux on them. I’m not implying that I or anyone could simply solder on those parts and make this work like a 16-zone controller though. The zone count is likely set in the microcontroller’s firmware. Speaking of…

The Brains

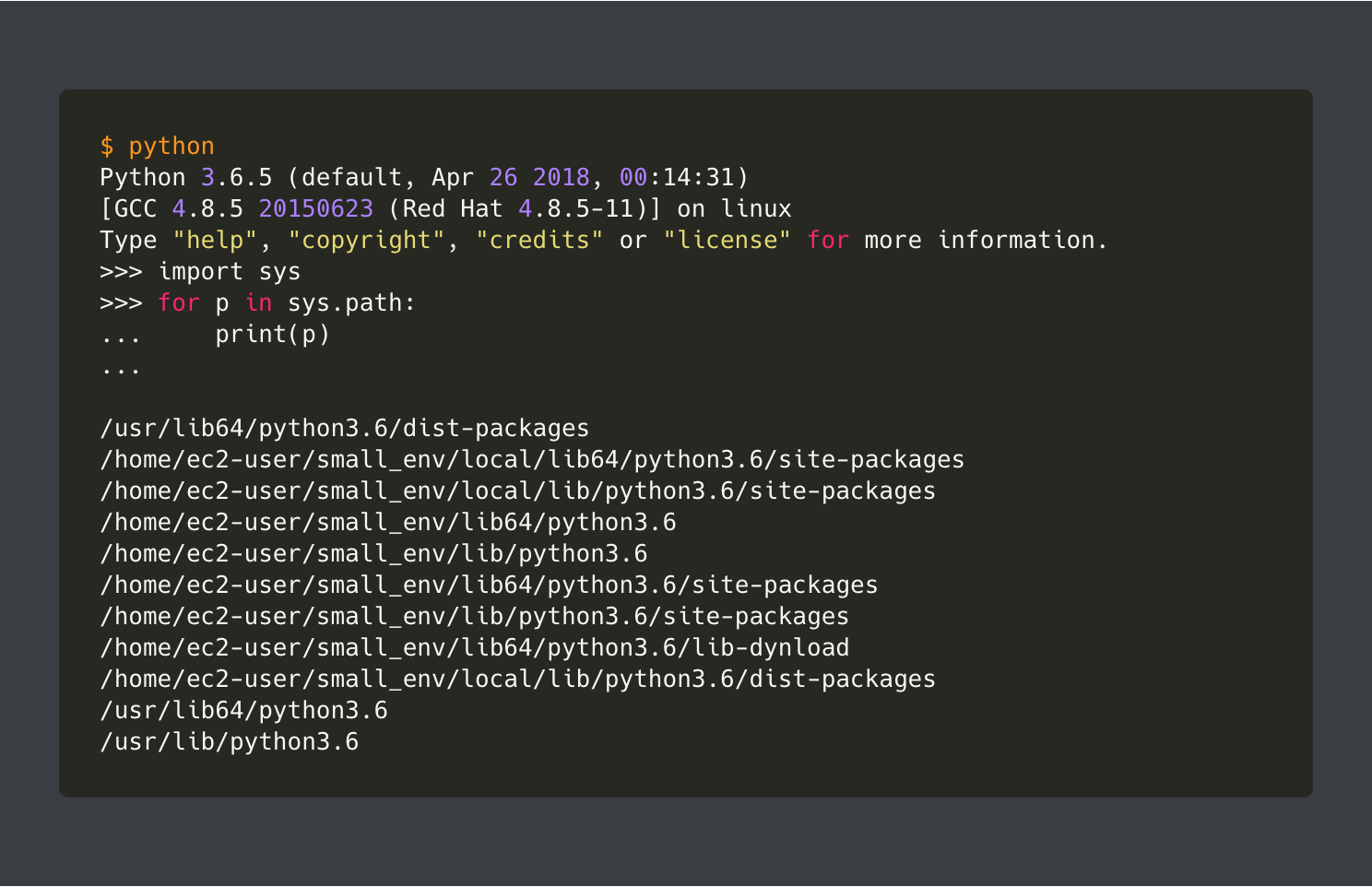

The core of the Rachio 2 is an AzureWave AW-CU288 (Cortex M3 200MHz, 802.11 b/g/n 2.4GHz, 3.3V). It seems like a decent microcontroller, though I’m not an expert by any means. (I do know that it’s considered obsolete now.) What I’ve learned from online searches is that the AW-CU288 is rated to operate from -40°C to 80°C. The temps in my garage have peaked at about 99°F (37.2°C). That is well under the limit, but inside a closed housing the Cortex M3 CPU runs hotter than the air around it, so its internal temp could be pushed closer to the edge of the operating range. The controller’s EM shield does have a good enough surface to try a small heatsink. But there is usually a decent gap between the soldered components and the underside of the EM shield, so a heatsink there might not draw much heat from the chip. Before going down that road, I inspected the board further.
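To put the temperature numbers above side by side, here is a small Python sketch of the arithmetic. It only compares ambient air temperature against the 80°C rating I found in my searches. It says nothing about the actual die temperature, which I have no way to measure, and the function names are just ones I made up for illustration.

```python
# Rated maximum operating temperature for the AW-CU288, per my online searches.
MODULE_MAX_C = 80.0


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def ambient_headroom_c(ambient_f: float, limit_c: float = MODULE_MAX_C) -> float:
    """Degrees Celsius between the ambient air and the rated limit.

    This is ambient headroom only. The chip's own heat and the closed
    housing eat into it by an amount this sketch does not model.
    """
    return limit_c - fahrenheit_to_celsius(ambient_f)


if __name__ == "__main__":
    readings = [("garage peak", 99.0), ("outdoor record", 107.0)]
    for label, temp_f in readings:
        temp_c = fahrenheit_to_celsius(temp_f)
        headroom = ambient_headroom_c(temp_f)
        print(f"{label}: {temp_f:.0f}°F = {temp_c:.1f}°C, "
              f"{headroom:.1f}°C below the rated limit")
```

On paper the garage air leaves roughly 40°C of margin, which is part of why I suspected something other than raw heat was also at play.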

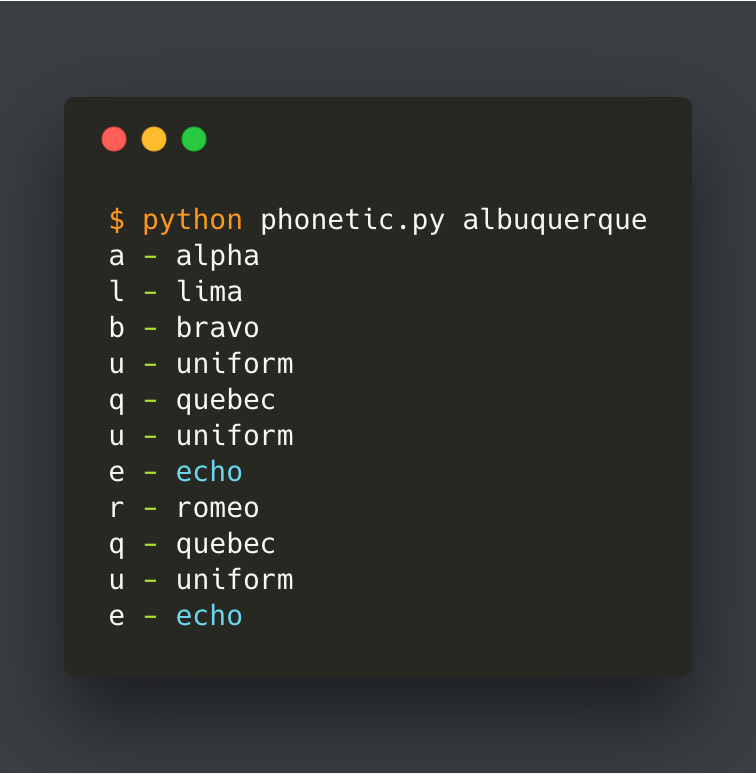

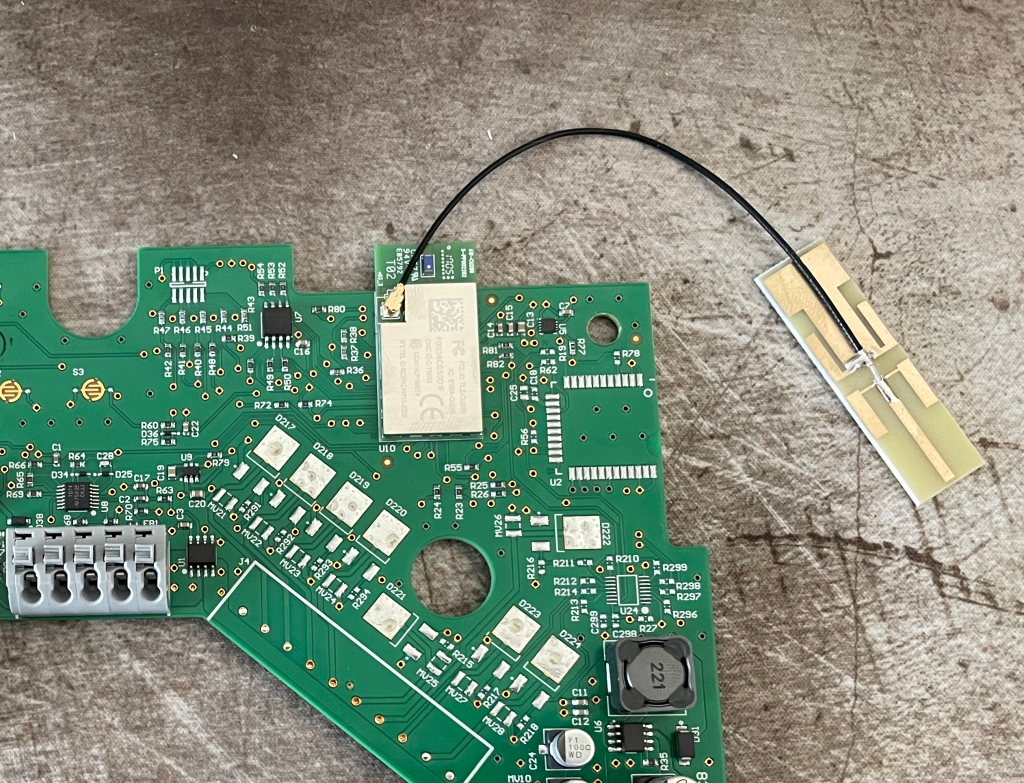

There is an integrated antenna that the FCC documents refer to as the “CHIP” antenna (FCC PDF). Considering I’ve never had Wi-Fi trouble in the past, that little CHIP antenna is pretty impressive. However, there is also a dedicated IPEX connector for a monopole/PIFA/dipole antenna.

CHIP antenna

IPEX connector

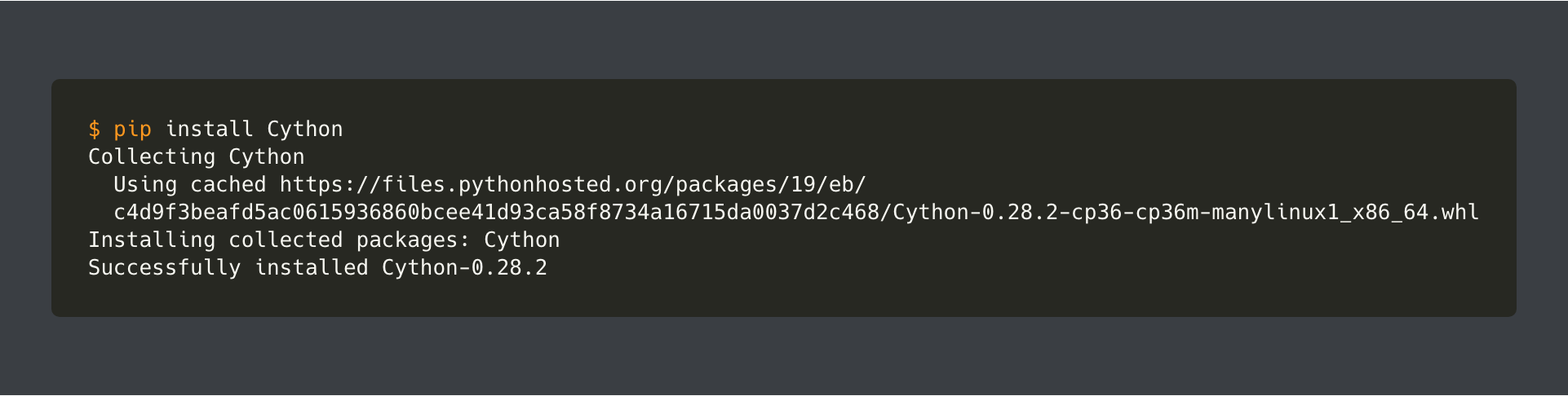

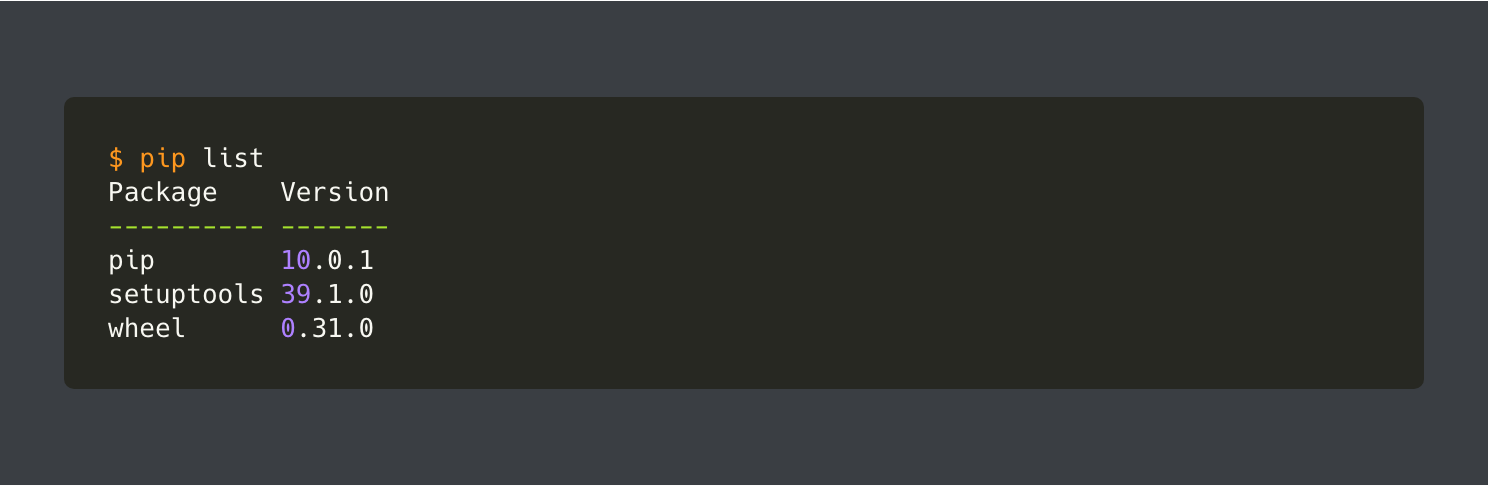

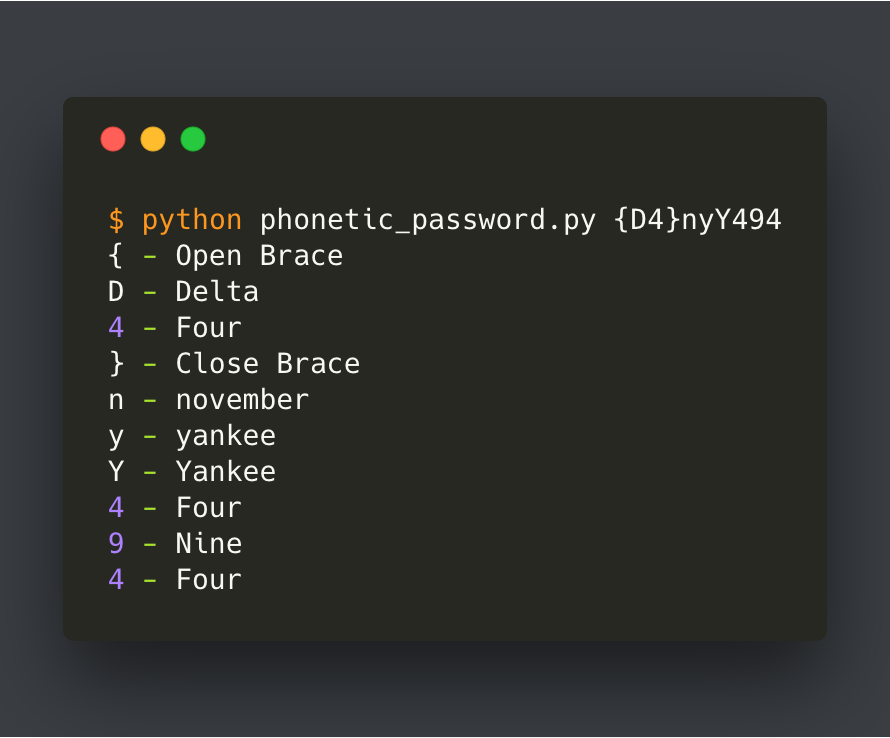

Testing New Antenna

Regardless of the heat issue, it would be better to have a longer antenna on this connector, right? And how much does one even cost? Like, 80¢ at most? Probably closer to 10¢ if buying in bulk. I have a few spare dipole antennas I purchased from AliExpress (product link) for a D1 Mini Pro project.

Since the controller housing seems to be made of extruded ABS plastic, I could have left this new antenna inside the housing for a clean look, but I decided it would be better to route it to the outside. (Between the power cable, zone wires, and moisture sensor plugged in, there is a lot of potential for signal noise.) So I drilled a hole in the back housing, routed the antenna wire through it (the antenna PCB came with double-sided tape attached), filled the hole with hot glue, and re-mounted the controller on the wall. Not the prettiest, but it works for me.

After I re-attached the power cable, the controller booted up and joined Wi-Fi faster than it ever has (normally ~25-40 seconds, now ~10-15 seconds). The RSSI is greatly improved as well. I’ve had the controller set up for a few days and it hasn’t dropped off the Wi-Fi even once, where before it was a daily occurrence. Even in 102°F (38.9°C) heat, there is no issue staying online.
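If you want something more rigorous than glancing at the app to confirm a fix like this, one option is to log whether the controller is reachable at regular intervals and then collapse that log into outage windows. Below is a minimal Python sketch of the collapsing step only. How you collect the samples (pinging the controller from another machine, reading router logs, and so on) is up to you, and the example data is hypothetical, loosely modeled on the 5pm-to-2am pattern I was seeing before the mod.

```python
from datetime import datetime
from typing import List, Optional, Tuple

# (time of check, was the controller reachable?)
Sample = Tuple[datetime, bool]


def outage_windows(samples: List[Sample]) -> List[Tuple[datetime, datetime]]:
    """Collapse time-ordered reachability samples into (start, end) outages.

    An outage starts at the first failed check and ends at the next
    successful one. An outage still open at the last sample is closed
    at that sample's timestamp.
    """
    windows: List[Tuple[datetime, datetime]] = []
    start: Optional[datetime] = None
    for when, reachable in samples:
        if not reachable and start is None:
            start = when  # first failed check opens an outage
        elif reachable and start is not None:
            windows.append((start, when))  # first good check closes it
            start = None
    if start is not None:
        windows.append((start, samples[-1][0]))
    return windows


# Hypothetical samples for illustration, not real measurements.
EXAMPLE_SAMPLES: List[Sample] = [
    (datetime(2022, 9, 6, 16, 0), True),
    (datetime(2022, 9, 6, 17, 0), False),
    (datetime(2022, 9, 6, 21, 0), False),
    (datetime(2022, 9, 7, 2, 0), True),
    (datetime(2022, 9, 7, 3, 0), True),
]

if __name__ == "__main__":
    for begin, end in outage_windows(EXAMPLE_SAMPLES):
        print(f"offline from {begin} to {end} ({end - begin})")
```

An empty result over several hot afternoons would be decent evidence that the drops are gone and not just that I happened to check the app at the right moments.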

Final Thoughts

So is heat the real issue, or is it the age of the controller hardware? The truth is that it’s probably both. It could also be that the capacitor on the CHIP antenna trace is failing. While the CHIP antenna on the AW-CU288 is impressive for its size, I really think Rachio should have spent the extra 10¢ to put an external antenna on this board. Not only does it significantly boost the signal, which would be great for people who mount their controller on the outside of their home, but it would also avoid issues like this one. Should I get an updated unit in the future, I’m definitely going to open it to see if I can add a better antenna.